There is a growing discussion about the natural resistance towards Artificial Intelligence (AI) and automation (such as robots on an assembly line). Will it take away jobs? Will it become self-aware and realize that humans stand in its way (think Skynet in the Terminator movies…)?

To better answer these questions, we need to look at the definition of AI first. Britannica defines artificial intelligence (AI) as the “ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings.” To evaluate if AI is a friend or foe, there are two factors we have to look at:

Using AI to Automate Processes

Robots and AI allow us to produce things faster, better and cheaper with higher consistency. This allows machines to replace people doing routine work, e.g. sorting mail, assembling a product, or summarizing a book. AI will be very disruptive for low-cost countries providing low-cost manufacturing to international firms, since robots can do this work much more cheaply. It will also be disruptive to countries with higher salary levels, though to a lesser degree.

“The threat of technological unemployment is real... For instance, Terry Gou, the founder and chairman of the electronics manufacturer Foxconn, announced this year a plan to purchase 1 million robots over the next three years to replace much of his workforce. The robots will take over routine jobs like spraying paint, welding, and basic assembly.” —MIT Professor Erik Brynjolfsson and research scientist Andrew McAfee wrote in the Atlantic.

Our forefathers probably had the same concerns during the Industrial Revolution. They saw cars replace horses for transportation and machines replace people in manufacturing. Did this increase unemployment rates? Yes, but only in the short term, since you need fewer blacksmiths and more drivers, and fewer factory workers and more machine operators. The impact was a significant jump in productivity - the machines allowed us to produce and deliver more and better products and services.

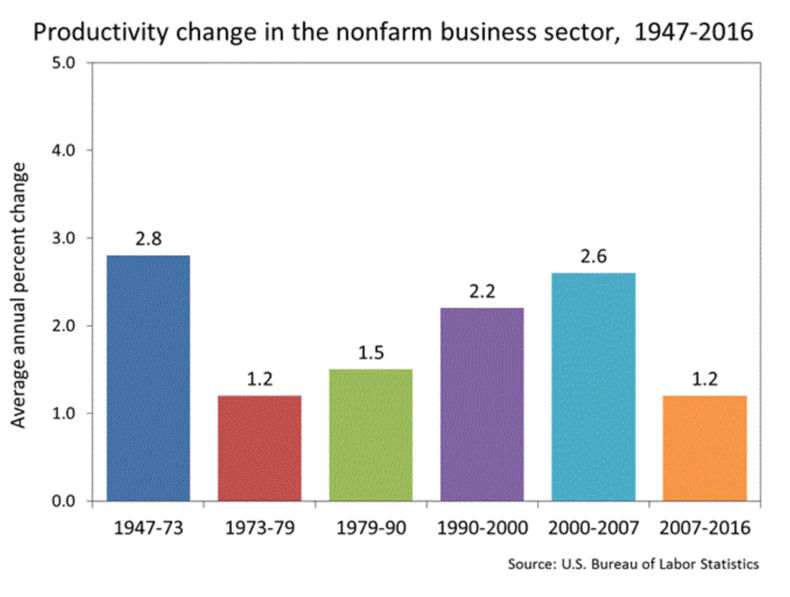

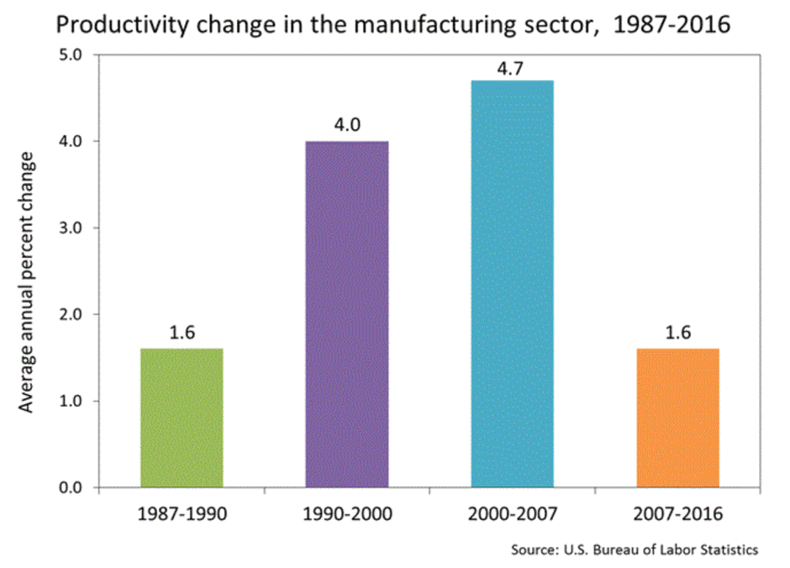

We are now faced with a similar transformation. We need a similar jump in productivity to be competitive and to support a growing population and more senior citizens. The chart below shows the productivity change in the US from 1947 to 2016.

Source: https://www.bls.gov/lpc/prodybar.htm

IT helped to fuel the growth in productivity from 1979 to 2007, but we have since struggled to take it (or IT) to the next level. Too much of our enterprise IT budgets has been spent on maintaining legacy systems, and they are now holding us hostage.

The slowdown in productivity growth since 2007 is even more visible when looking at US manufacturing. We used IT to improve the value chain, but we are stuck just trying to work harder, not smarter.

Companies like Google and IBM have already realized that this is the next frontier:

“Some people call this artificial intelligence, but the reality is this technology will enhance us. So instead of artificial intelligence, I think we'll augment our intelligence.” — Ginni Rometty

“Artificial intelligence would be the ultimate version of Google. The ultimate search engine that would understand everything on the web. It would understand exactly what you wanted, and it would give you the right thing. We're nowhere near doing that now. However, we can get incrementally closer to that, and that is basically what we work on.” —Larry Page

We need a digital transformation, and robots and AI are therefore an opportunity for us to improve local production and value creation. A company – or country – that invests in robots and AI will grow faster than its competitors. For a country, this may mean a higher GDP with less effort. It could also mean that citizens work less than they do now. We might in the future have a 4-, 3-, or even 2-day work week with the same – or higher – quality of life.

Automation or Augmentation?

As stated in the book Only Humans Need Apply: “The intent is not to have less work; it is always to allow them to do more valuable work (..) The best investment in intelligent machines do not usher people out the door, but allow them to take more challenging tasks.”

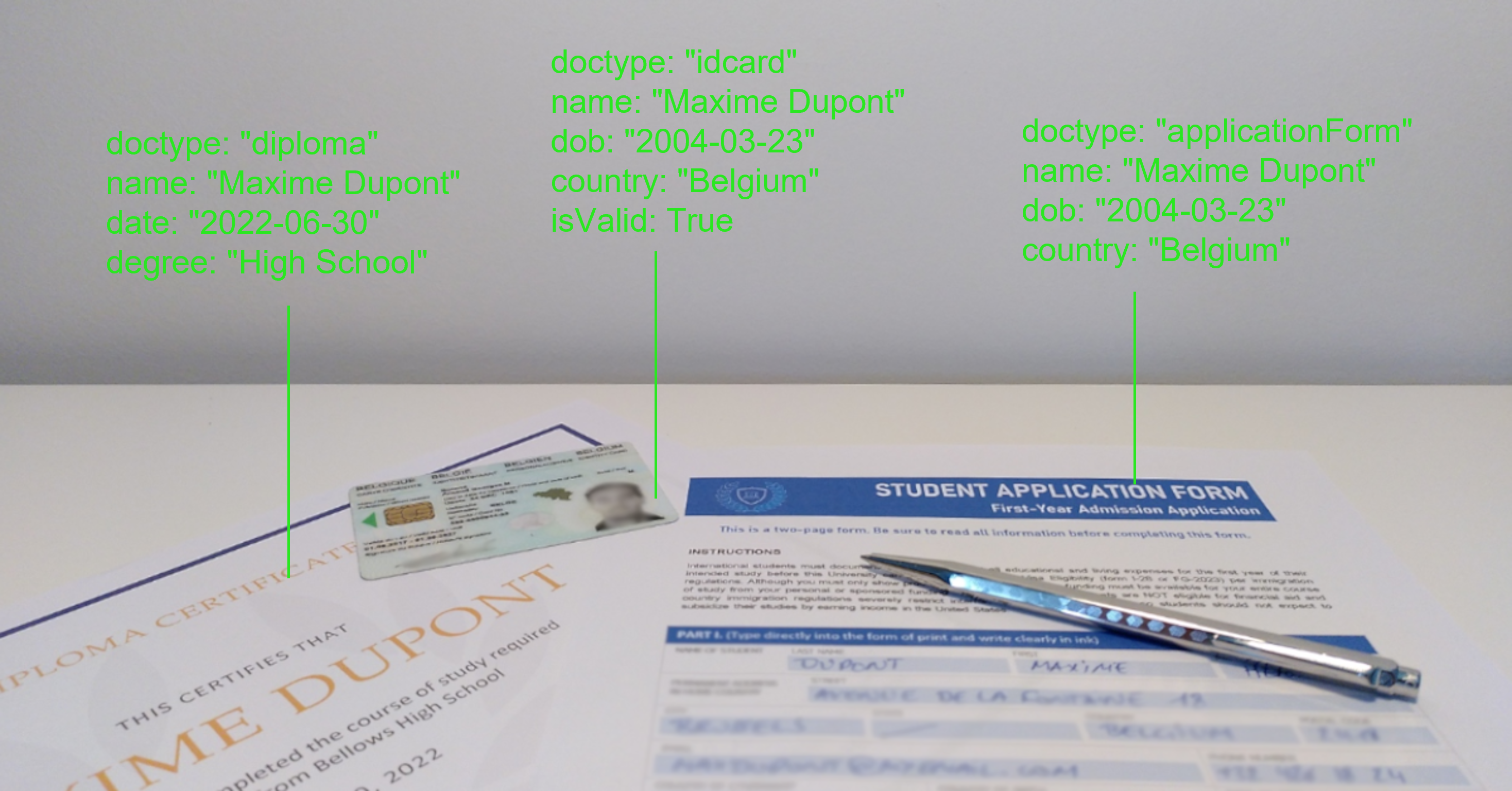

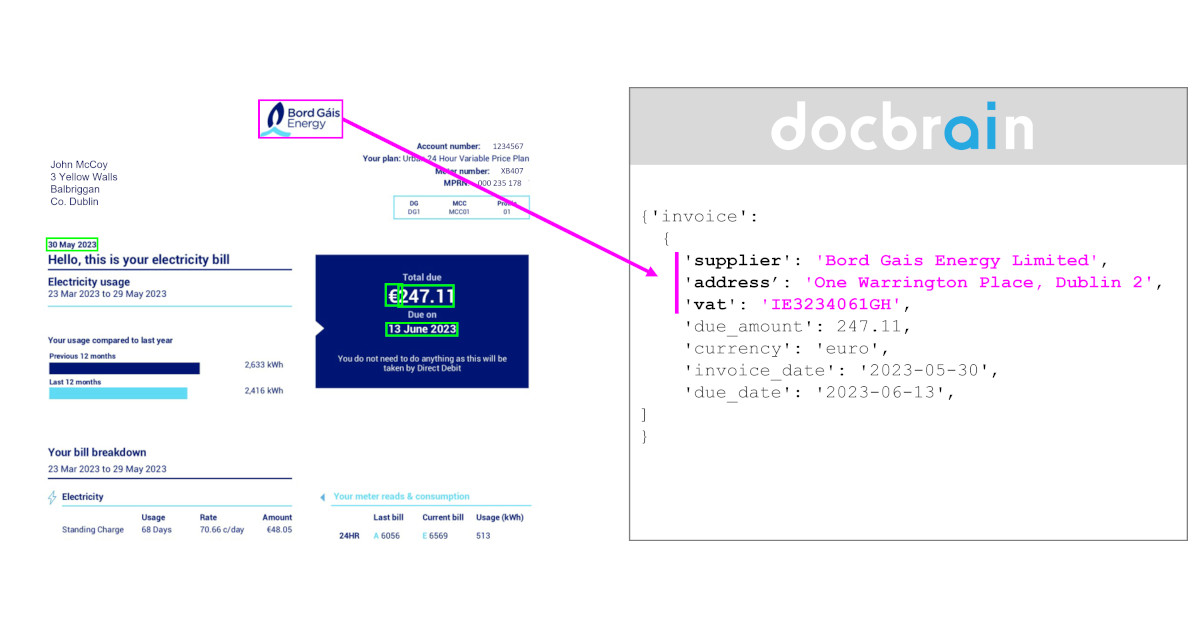

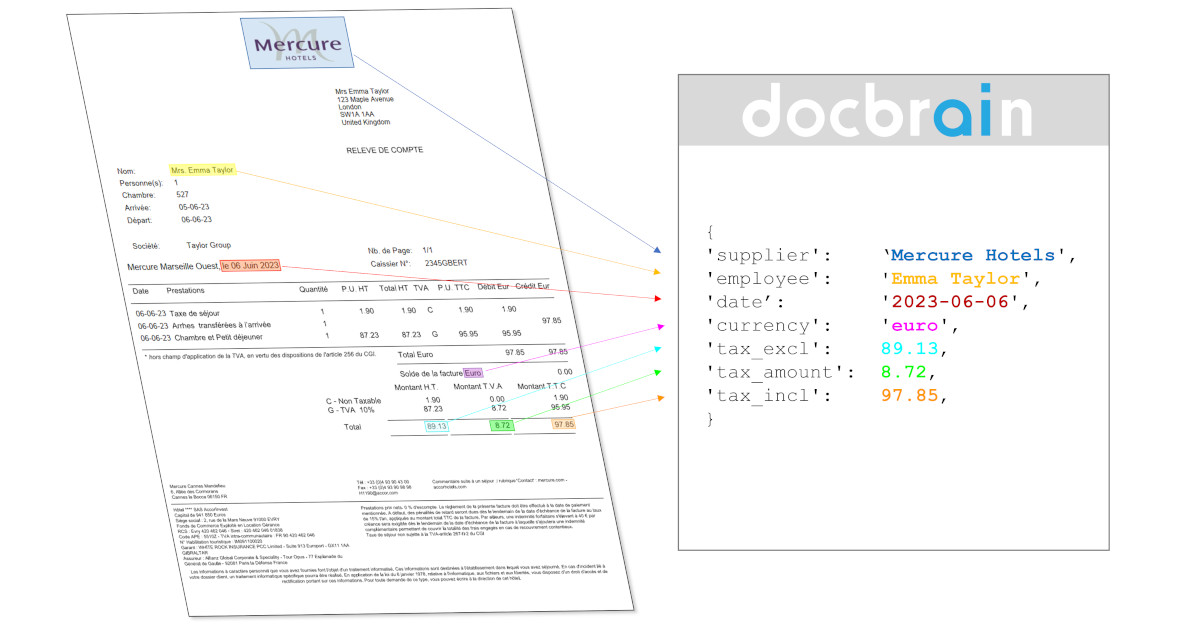

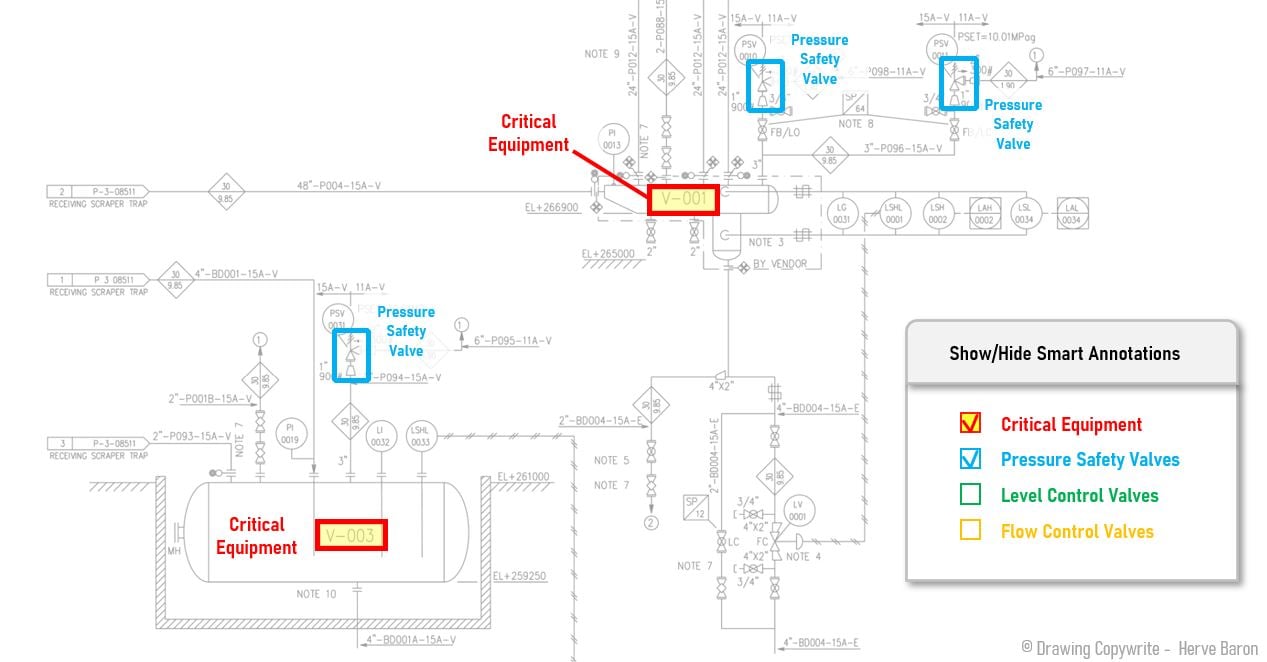

In today's working environment, the proper application of AI needs very specialized – and very human! – people to set it up and manage it. This requires workers with new skills. As Moonoia's Wim De Maertelaere mentioned during this year's AIIM ELC Meeting, “AI is not an out-of-the-box solution. It takes expertise to select the most efficient algorithms and to conduct the initial training of neural networks.”

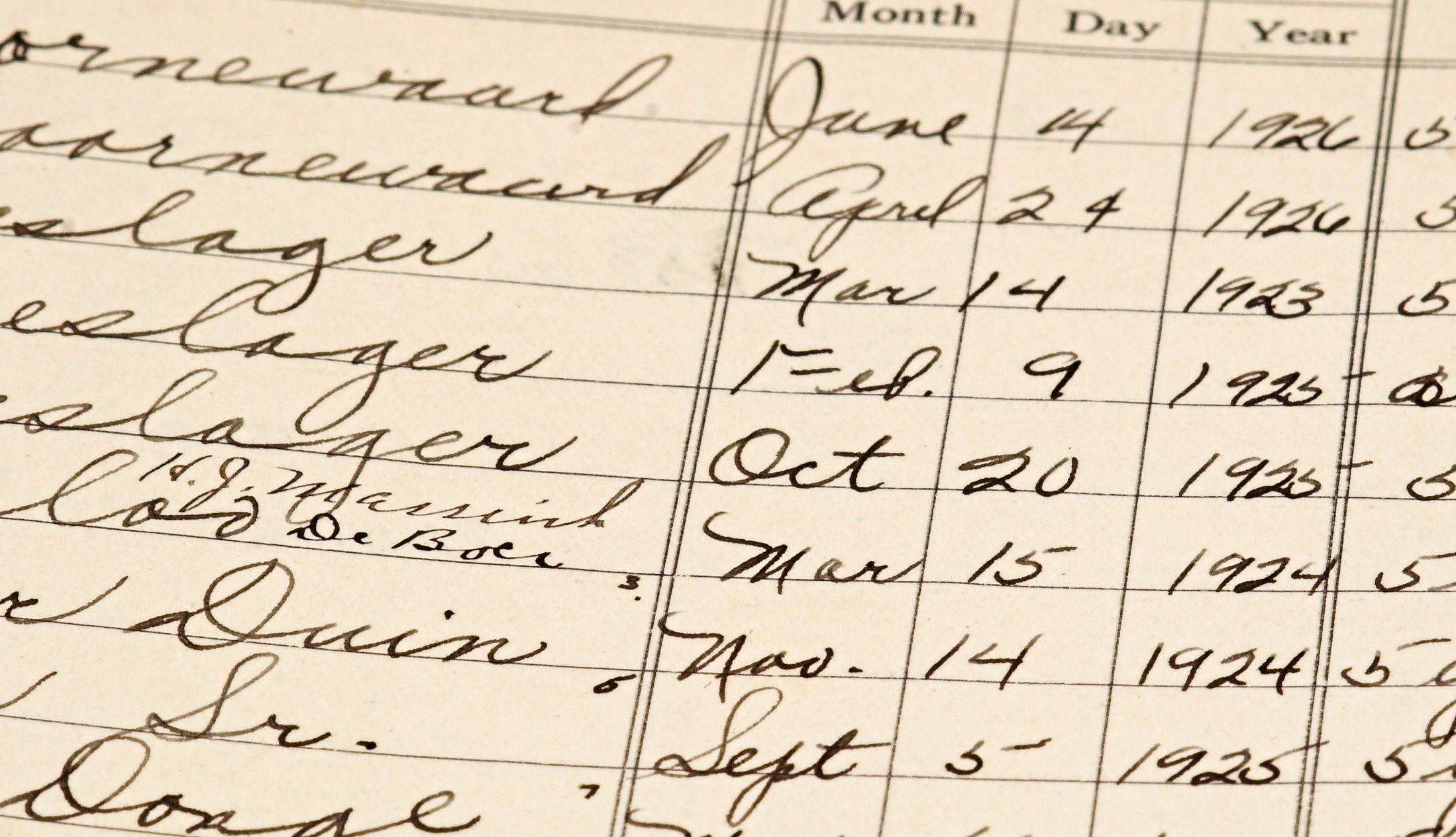

Many of our automated solutions - think software platforms and applications - generate large volumes of data (and create it faster) and augment information retrieval. Deciding what to do with this data is often still in the hands of knowledge workers. According to Only Humans Need Apply, knowledge workers are as important as ever, as many systems that operate on principles of AI still rely on humans to:

- Design, create, and train the AI platforms

- Provide context and make the connection to real-world situations

- Integrate and synthesize information across multiple systems

- Monitor and provide quality assurance (QA)

- Know the machines' weaknesses and provide human intervention to bridge the gap

and, finally, convince 7 billion humans to trust a decision made by an algorithm!

Enabling Robots and AI to Learn

Humans learn from their mistakes, but we often forget and get easily distracted. AI will also learn from the past – not only from its own past, but also from that of other robots and AI. It may become a lot smarter than us, and some people therefore worry that AI will replace us at the top of the food chain (even though AI only really needs electricity to function).

“Artificial intelligence will reach human levels by around 2029. Follow that out further to, say, 2045, we will have multiplied the intelligence, the human biological machine intelligence of our civilization a billion-fold.” — Ray Kurzweil

“Once computers can effectively reprogram themselves, and successively improve themselves, leading to a so-called 'technological singularity' or 'intelligence explosion', the risks of machines outwitting humans in battles for resources and self-preservation cannot simply be dismissed.” — Cognitive Science Professor Gary Marcus wrote in the New Yorker.

“The development of full artificial intelligence could spell the end of the human race… It would take off on its own, and re-design itself at an ever increasing rate. Humans, who are limited by slow biological evolution, couldn't compete, and would be superseded.”— Stephen Hawking

Some entrepreneurs like Elon Musk advocate government regulation to ensure we don't end up in trouble with AI. We can't stop progress, but we can ensure that we do it the right way – similar to how regulations govern stem-cell research or chemical weapons. We need rules or principles to guide our research and use cases, and a governance body to ensure compliance. This will help us adopt and leverage AI the right way.

“We must address, individually and collectively, moral and ethical issues raised by cutting-edge research in artificial intelligence and biotechnology, which will enable significant life extension, designer babies, and memory extraction.” —Klaus Schwab

Some science fiction writers have already realized this and built such principles into their robots, e.g. robots are not allowed to harm people. The plots of some books and movies are therefore about rogue AI and robots that harm people in pursuit of an overall goal. We need to come together to create rules that prevent this outcome. Governments and companies need to commit to these rules, or face the consequences of non-compliance.

Interested in learning more about AI business cases and challenges?

Subscribe to our blog or visit www.moonoia.com.